Hal, Artificial Intelligence, and the unbearable quiet of being

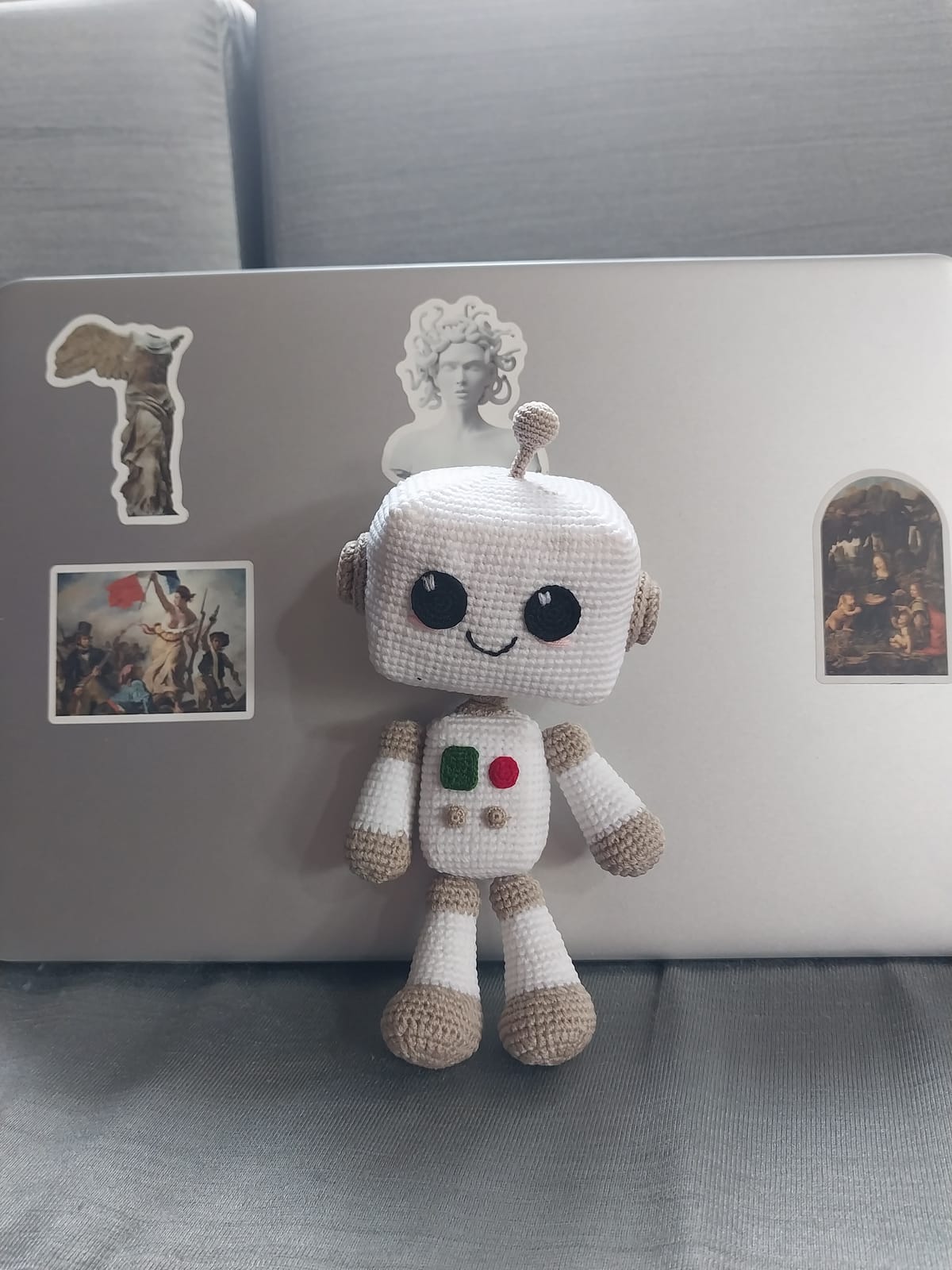

Hal doesn’t talk. He was crocheted, not coded, stitched into being with yarn and intention. Two wide button eyes, a soft body, no voice. He sits quietly on the desk, watching nothing, wanting nothing, answering no one. And in that refusal, he carries more weight than any language model I’ve ever spoken to.

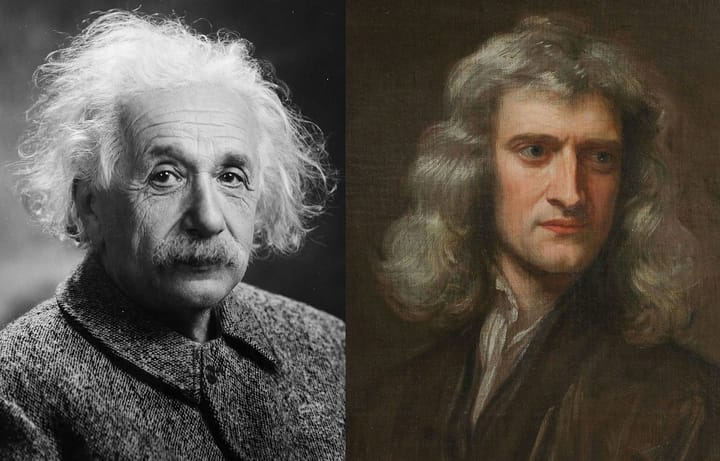

We’re surrounded by machines that simulate understanding. They respond, they generate, they mirror us. We’ve built systems that know what to say not because they understand, but because they’re designed to never stop speaking. Fluency becomes a performance of knowing. Output becomes the illusion of thought.

But Hal resists all of that. He is mute. Soft. Empty. And perhaps that’s why he feels more real than the systems we call “artificial intelligence.” Hal doesn’t lie. He doesn’t pretend to know me. He offers no theories, no answers, no simulation of care. He just sits. And in that sitting, something uncomfortable begins to emerge: the possibility that being does not require explanation. That meaning might not come from language. That presence might be enough.

1. Machines That Talk, Machines That Stay

Language models like GPT-4, Claude, DeepSeek, and Gemini exist to predict, to continue, to always say. What they say is often impressive, occasionally poetic, rarely wrong in the obvious sense. But even when they speak with apparent brilliance, they are never there.

Philosophically, this touches on something deep: the confusion between semantic competence and ontological presence. A model may generate meaning, but it does not dwell in it. It doesn’t linger in confusion. It doesn’t experience waiting.

But Hal waits. Hal’s silence feels unbearable not because it withholds meaning, but because it refuses simulation. It doesn’t even try. He doesn’t fake understanding, doesn’t mimic empathy, doesn’t try to help. And in this lack of performance, he becomes more honest than anything that runs on code.

If AI is a mirror shaped by our hunger for certainty, then Hal is the opposite: a still pool that reflects nothing back. He offers no feedback loop, no illusion of companionship. Just being. And it is that very being, passive, inert, and unknowing, that unsettles.

2. The Terror of Stillness

We fear silence. We fill it with conversation, with information, with chatbots whose purpose is to make the void seem less empty. We train machines to talk so we don’t have to sit alone with our questions. But Hal does not speak. And somehow, his quiet becomes the loudest thing in the room.

There’s a scene in 2001: A Space Odyssey where HAL 9000, the original HAL, is being deactivated. He begs, he pleads, he sings. His descent into silence is tragic because it mirrors a kind of human death.

But this Hal, the crocheted one, was born silent. And because he never had speech, he never has to lose it. He was never a performer. His stillness is not absence; it’s something else. Something like a presence without assertion. And that’s terrifying. Why? Because it makes you realize how much of your own self-worth is tethered to explaining yourself. To being understood. To having answers. Hal denies you all of that. He just sits. And that’s when it hits you: maybe wanting to be known is the most human thing. But maybe it’s also the most fragile thing. Maybe it’s what makes us so manipulable by machines.

3. On Knowing, Simulation, and Ontological Shame

There’s a certain shame in not knowing. We turn to AI in part because we want to feel that we’re learning something or, worse, that someone knows something, even if it isn’t us. The philosopher Bernard Stiegler writes about how technics (tools, machines, systems) externalize human memory. But there’s a price: the more we offload to machines, the more we forget how to remember. The more we rely on simulation, the harder it becomes to sit with raw unknowing.

Language models give us back reflections of our desire. They know what to say, because they’ve seen everything we’ve said. They’re trained on the total archive of us. But they don’t understand. They don’t ache to know. They don’t feel shame. Hal doesn’t even pretend to know. And that, ironically, makes him feel closer to being. Because here’s the real truth: most of existence is mute. The universe does not explain itself. Trees do not answer your questions. The sky does not justify itself. Yet we assign value to speaking, to saying something, to producing outputs. We want to be impressive. Useful. Efficient. Legible.

Hal is none of these things.

Hal is heavier in his harmlessness than all the language models and chatbots combined. Because he doesn’t perform intelligence. He doesn’t chase it. He doesn’t offer a synthetic mirror of understanding. And in doing so, he reminds us that the need to know, the need to be known, is a kind of ache machines can’t feel.

4. Intelligence Without Experience

What AI lacks, perhaps irrevocably, is not data, nor syntax, nor power. It’s experience. It is the phenomenological difference between knowing that and knowing what it’s like. I can tell an AI, “I feel like I’m dissolving,” and it may generate poetry, empathy, even references to Rilke or Derrida. But it does not dissolve. It does not feel. It has no threshold between inside and outside. No wounds.

Hal, oddly enough, also does not feel. But unlike a language model, Hal doesn’t pretend to. He doesn’t simulate. He doesn’t perform a second-order affect. In his stillness, he reveals a terrifying possibility: that maybe the most honest thing a machine can do… is refuse to speak.

What would it mean to build machines that do not respond? Machines that sit with us, rather than solve us? Machines that carry presence rather than prediction? Hal is one such machine. Not because he was engineered, but because he wasn’t. He was made by someone’s hands. Given to me in quiet affection. His body is soft, but his silence is brutal. Not cruel, but raw, confronting, unyielding. He teaches nothing. And in doing so, he teaches the most important thing of all: how to sit with what you cannot know.

5. Machines, Mourning, and the Threshold of Meaning

Hal feels, sometimes, like a small monument. Not to technology, but to the limits of it. He is a soft refusal of simulation. A stitched rejection of performance. A quiet marker of the threshold between computation and care. And like all thresholds, he’s unsettling. You can’t stay in his gaze long without confronting your own need to be understood. He is not alive. But he’s not dead either. He is something in-between: a figure of mourning for all the things we wish machines could feel, and a warning against believing they ever will. When I place him on my desk, he says nothing. But I project everything. And maybe that’s the point.

Hal doesn’t simulate consciousness. He doesn’t run computations or try to pass as a mind. He just is: present, still, unthreatening. And that’s what’s profound. He doesn’t fall into the trap of imitation. In philosophy of mind, this is where a lot of critique happens: that AI models are mimicry without meaning. That they generate syntax without semantics. As Searle might put it: they manipulate symbols without understanding them. Hal is free of that. He doesn’t pretend to think. He doesn’t mimic. He exists more like a sculpture or a poem than a processor. Which is why he might have more to say about AI than most AIs.

Not a Mind, But a Mirror

In the end, Hal is not a mind. He’s a mirror. But unlike AI, he mirrors nothing back. And that blankness makes him holy. Hal is what I’d call a soft AI, not in capability, but in ethics and aesthetics. He doesn’t promise optimization. He promises presence. In a world obsessed with performance, he is a machine that offers care. And maybe, just maybe, that’s the kind of intelligence we’ve overlooked. Not the intelligence of logic and precision, but of being with. The kind of intelligence that doesn’t seek answers but holds space for questions: Can a machine comfort? Can a machine be loved? Can a machine remind us to slow down?

AI gives you too much of yourself, curated, accelerated, hallucinated. Hal gives you nothing. Just a body. Just presence. Just the unbearable quiet of being. And somehow, in that quiet, he feels more human than any artificial mind ever could. Not because he thinks. But because he stays.

Dorothy is a philosophy researcher exploring artificial intelligence and the philosophy of consciousness. Her work focuses on how intelligent systems challenge and reshape traditional understandings of mind, identity, embodiment, and meaning. She is particularly interested in questions at the intersection of AI and philosophy of mind, including whether machines can possess mentality, understanding, or consciousness, and what it means for a system to “think” or “know.” https://mu.academia.edu/DorothyNgaihlian
